Hao Chen

218 Mengminwei Building

Zijingang Campus, Zhejiang University

Hangzhou, China

Hao Chen is a Research Professor in the College of Computer Science and the State Key Laboratory of CAD&CG at Zhejiang University. Before joining ZJU in 2022, he was a senior researcher at Huawei Noah’s Ark Lab (Genius Youth Program). He received his Ph.D. degree from the University of Adelaide, where he was a member of the Adelaide Intelligent Machines Group and was advised by Professor Chunhua Shen. Before coming to Adelaide, he obtained his undergraduate and master's degrees from Zhejiang University and worked as a researcher at NetEase Inc.

His general research interest lies in foundation models for computer vision. His recent research involves:

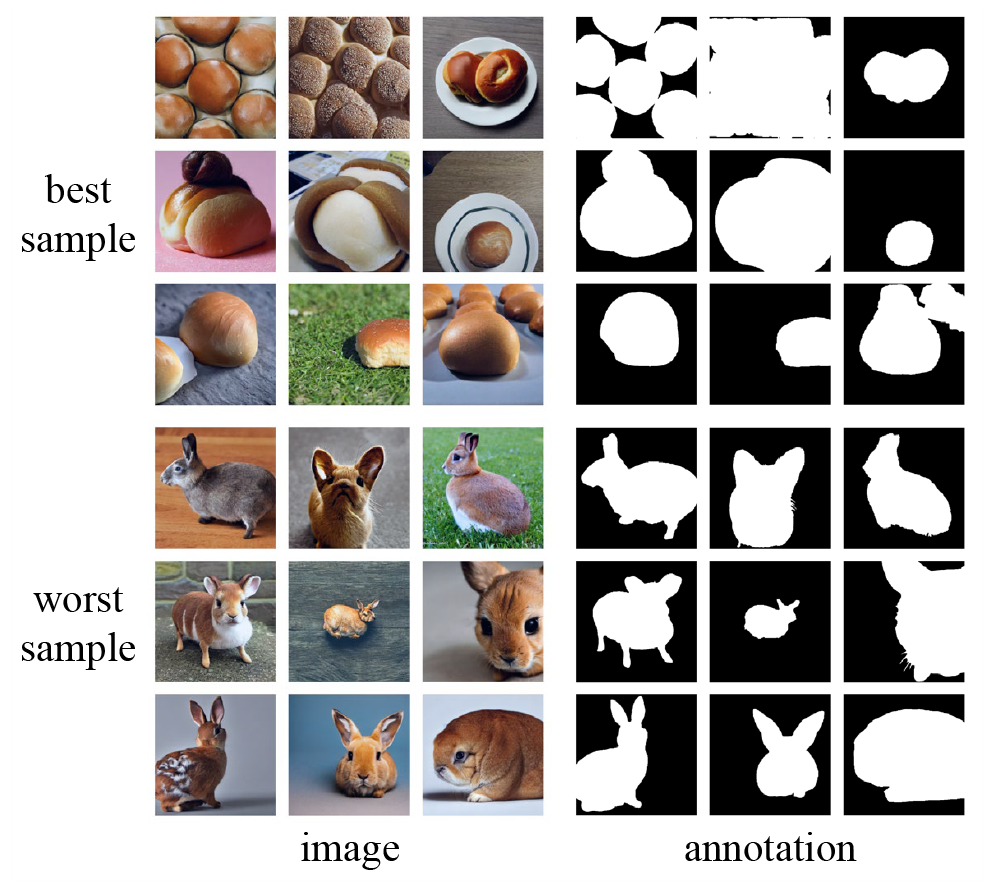

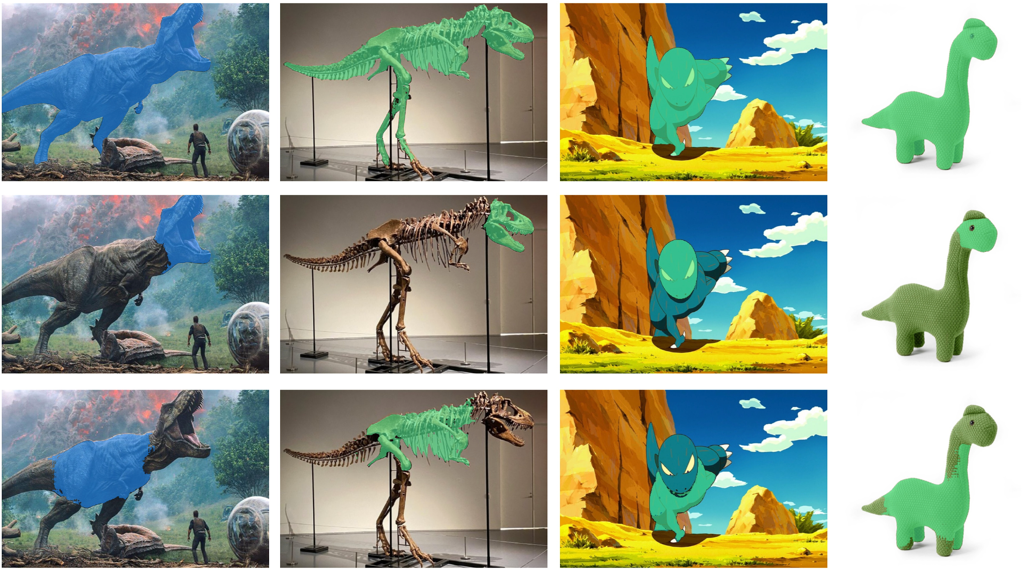

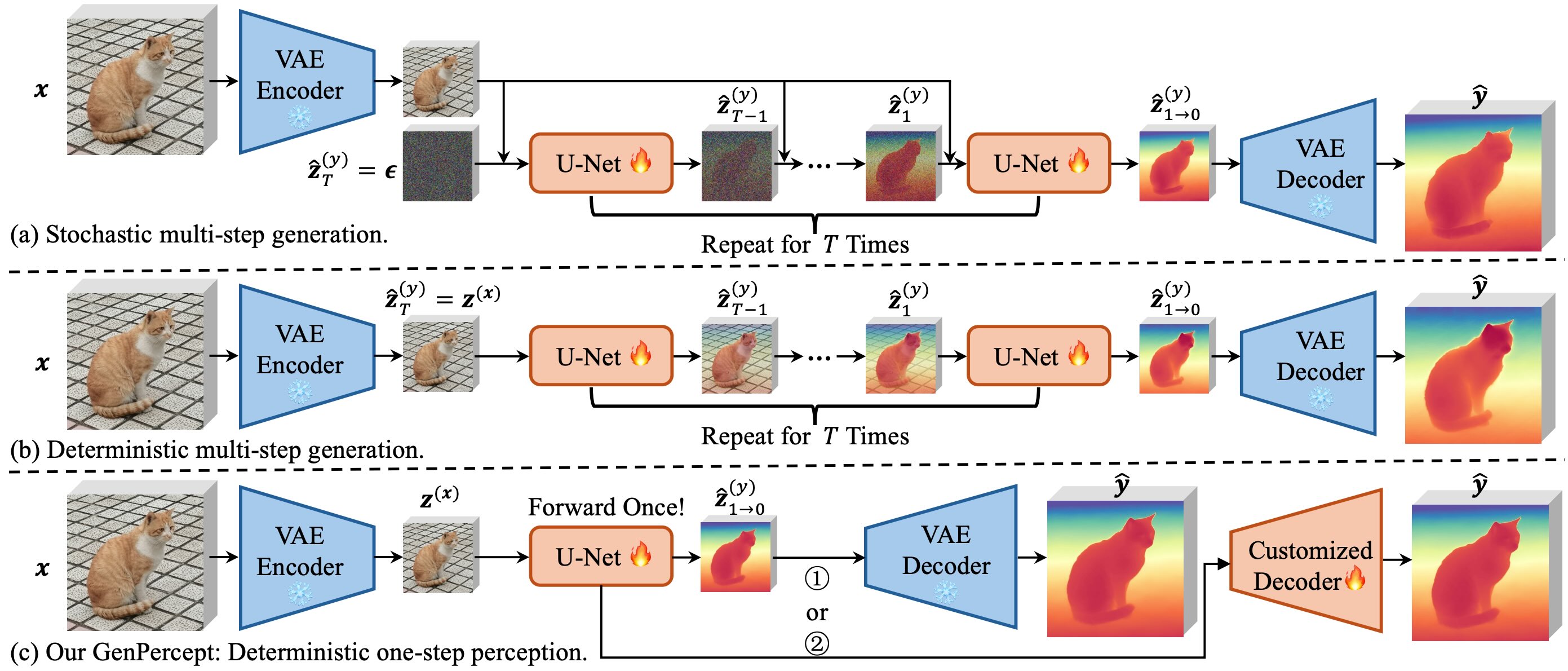

- Repurposing generative models for perception tasks: Diception, GenPercept.

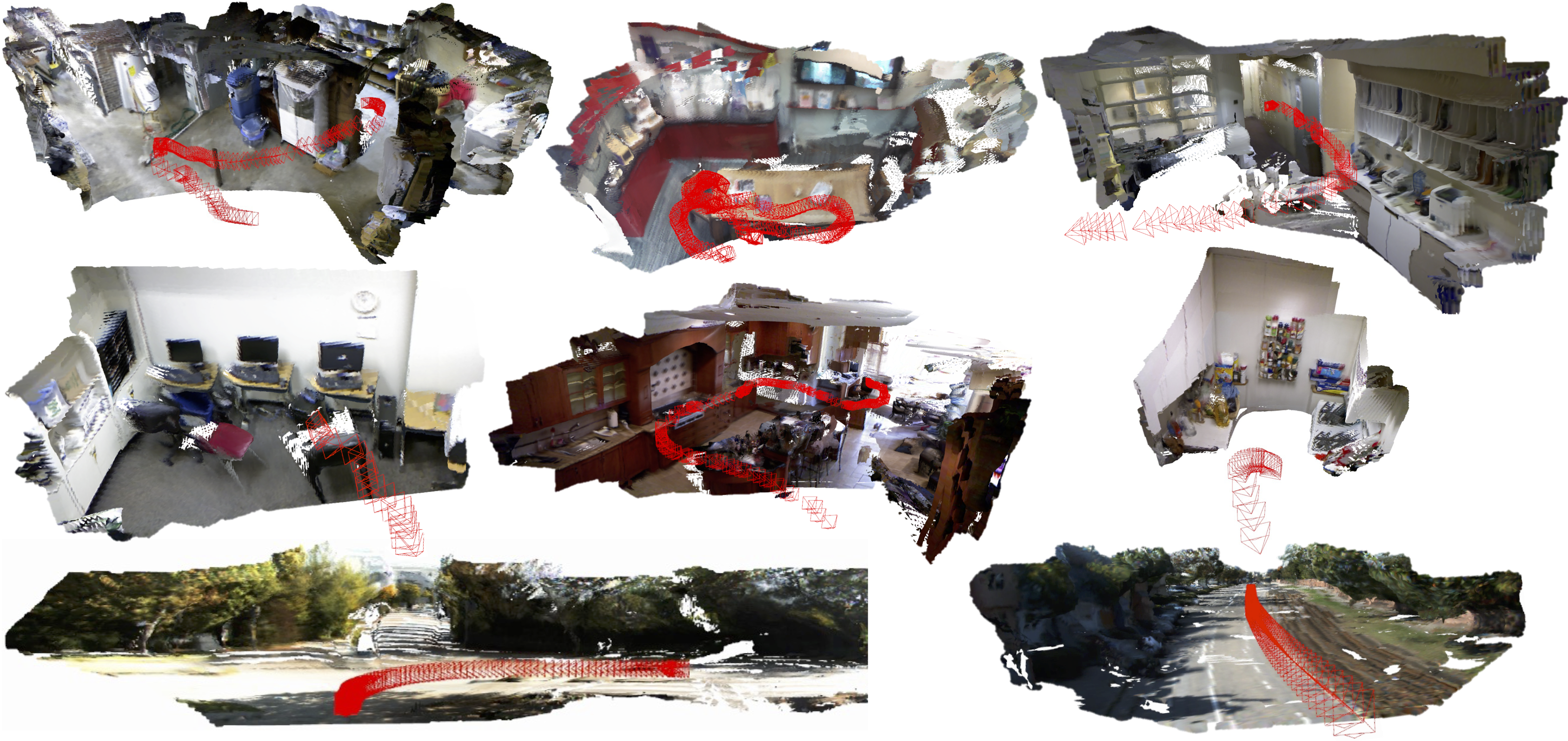

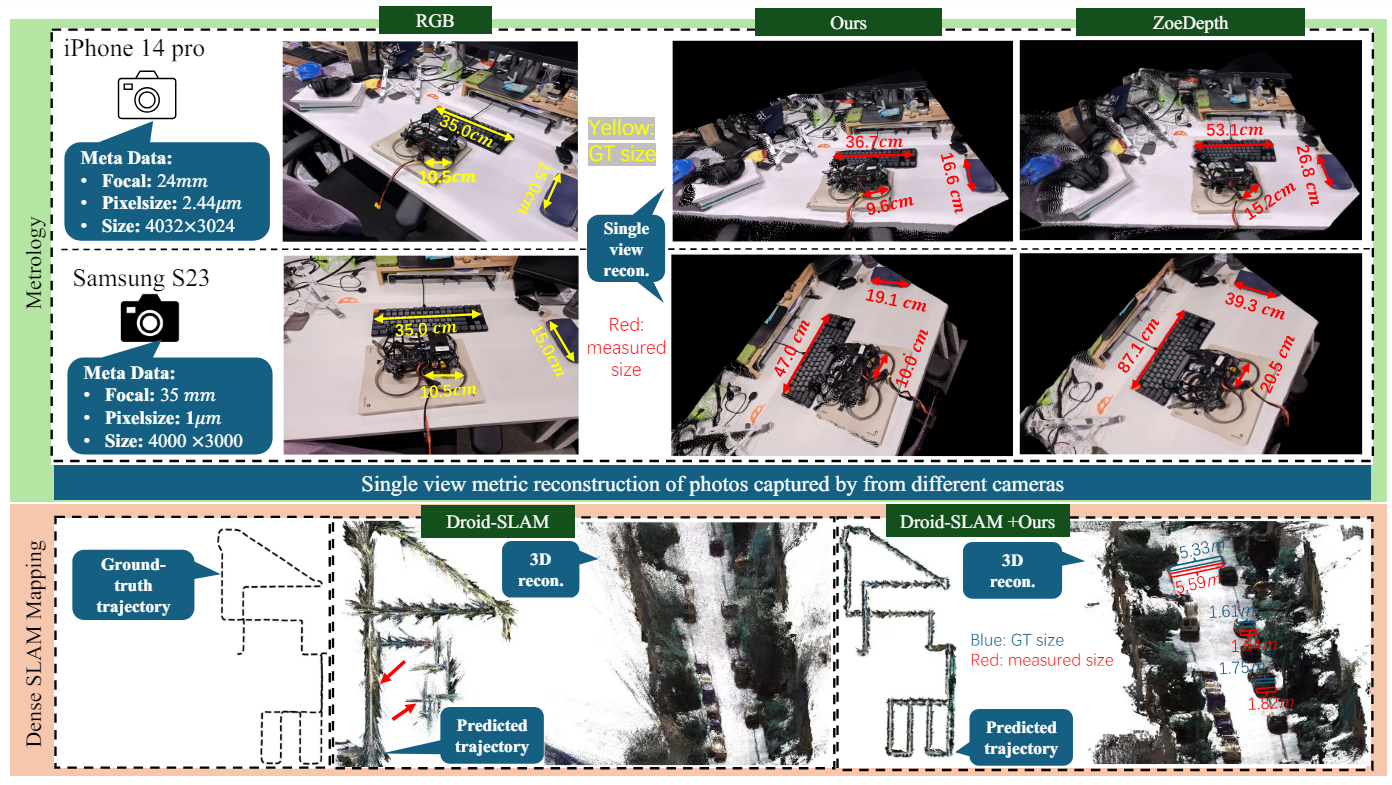

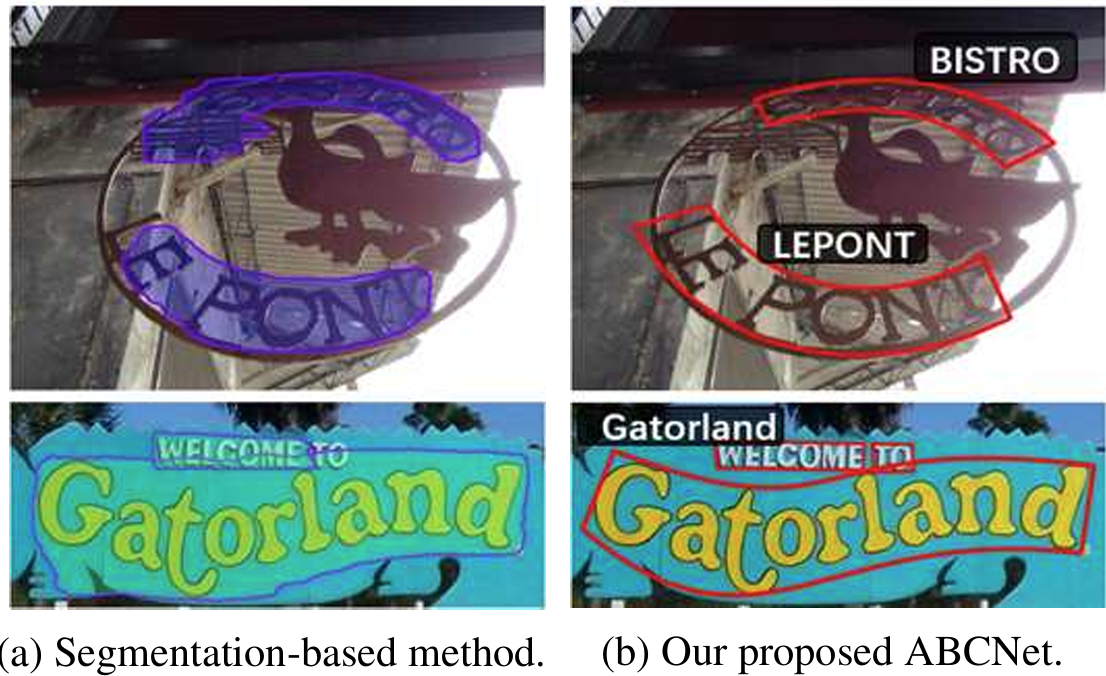

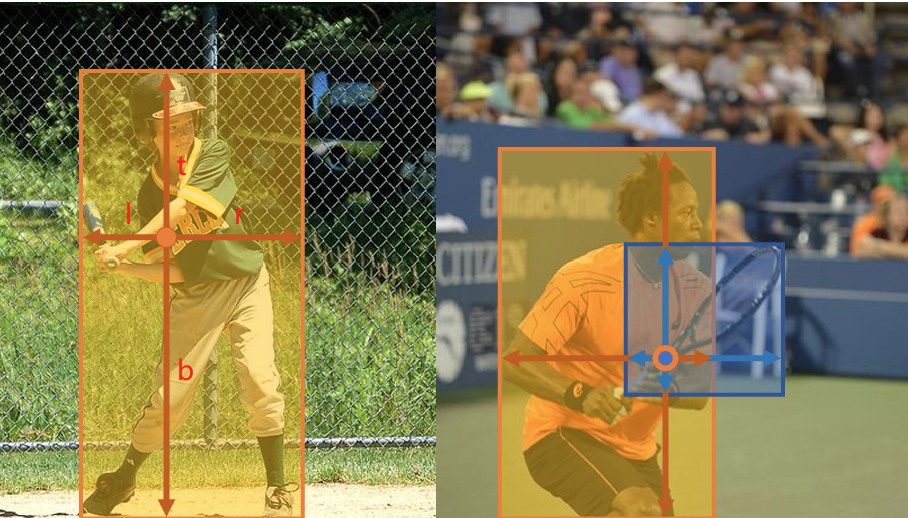

- Foundation models for perception: Metric3d, Matcher, AdelaiDet.

- Planning and action models for Embodied AI with VLMs: Odyssey.

Internship applications are welcome! If you want to apply for a postgraduate degree, please contact me in advance!

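Postdoc, research assistant, and visiting internship positions are available! If you want to apply for a master's or Ph.D. program, please join the group as an intern beforehand; interns with research output will be given priority in admission!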